How might we use MVPs in the corporate world?

Read time: 18 minutes (or 3 minutes if you watch the video instead)

"MVPs are no good for us, what we need are scaled products".

I'm sat in a board room in Berlin listening to a senior leader who is looking out over the sweeping view of the Berlin skyline. This sentiment is widely held. Other clients have even told me they have banned the use of the word as it leads to "lazy thinking".

It's an understandable opinion. True business value doesn't come from small scale - it comes from rolling out change broadly across your whole business. Sometimes it can feel like your whole team has somehow forgotten this and is tinkering in some dark corner with a product that will have an aggregate userbase of a single client and Bob from accounting.

Unfortunately, this anti-MVP opinion is also dangerous - at least if your goal is to ever successfully reach scale.

If you're keen for a quicker version of this article, check out the video version below. Otherwise, let's keep going.

Let's rewind a little.

The term MVP stands for "minimum viable product" and was first popularized by Eric Ries in his 2011 book "The Lean Startup". For the sake of historical accuracy I'll point out that he didn't invent it; that honor goes to Frank Robinson ten years earlier. But Eric was the one who really brought it to life.

The Lean Startup is, slightly unsurprisingly, focused on startups, most of which have some kind of fundamental hypothesis that they need to prove. For most, this was fairly simple: "Does my product solve a real problem my target customers have and will they pay to solve it?". Eric called this effort searching for product/market fit. In the service of proving their hypothesis, it was very likely that the startup in question would need to build something.

So far, so good. What Eric had noticed, however, is that thanks to a deep-rooted human instinct that rejects releasing anything that isn't "perfect", startups would spend months, if not years, building an initial product to prove their key hypothesis - if indeed they had defined a hypothesis at all, which most hadn't. The challenge? Most of the time the startup would spend so long building that product (or coming up with a hypothesis) that they would run out of cash. Startups in general have an often-quoted 90% failure rate. Go figure.

Putting a microscope on the products that startups released (including from his own experience), Eric realized that this lack of focus on a hypothesis led to startups building bloated products that either contained huge chunks of unneeded features or relied on unproven assumptions about how customers would behave, whether data would be available or indeed whether certain tech would even work.

Having spent the last 20 years building both corporate products and startups, I can confirm that this is a challenge shared by both. In fact, if anything, corporates tend to be the worse offenders thanks to their deeper pockets - they can keep building the wrong thing longer without anyone noticing.

How do we stop this ingrained desire to over-engineer? In Eric's lean startup, the MVP answered this by ensuring we only built the minimum viable product required to prove our hypothesis. Nothing more, nothing less. The simpler it was, the less time and money it would take to prove the hypothesis and move on. Moving on meant either pivoting the startup to something else or doubling down and continuing on a journey to scale.

Fast forward

The MVP definition used by many in a corporate setting holds no such analytical rigor and is indeed lazy - it's become a banner for something throwaway or low quality - "why would I want to release something like that?" would be a fair response.

This is a pity, as when properly designed, MVPs can deliver highly valuable, validated learning (which is truly your only achievable target in general). However, if we are lazy, and don't ensure we know what we are proving before we start to build, then it can seem to take us even further from the goal of scaled benefit than before.

Long, hard, slogging experience has taught those of us who have dedicated our lives to building product a lesson. It came through board room battles, sleepless nights or, like Eric Ries in the opening section of The Lean Startup, full-on screaming matches in the rain. The lesson is that just going out and declaring you want to build a successful, scaled product from the get-go is simply not going to work. Creating value using products (digital or otherwise) turns out to be really, really hard, and going from zero to hero in one fell swoop is crushingly hard.

Momentum towards scaled success

To avoid this expensive defeat, we build modern products in iterations. These iterations are ongoing; you don't sign off one and expect it to be the end of the road. After the first version of Twitter got widespread usage, Jack Dorsey and the team didn't roll into the office and declare to the product team: "Pack it up guys, we've cracked it - you'll need to find another job". No, if a product is worth building and gains traction then your first version is purely the first step.

For each one of the iterations you then move through, you're going to need to make sure you can describe what hypothesis you are trying to prove and make sure your product build is focused on proving it. If you don't do this, you are probably burning money.

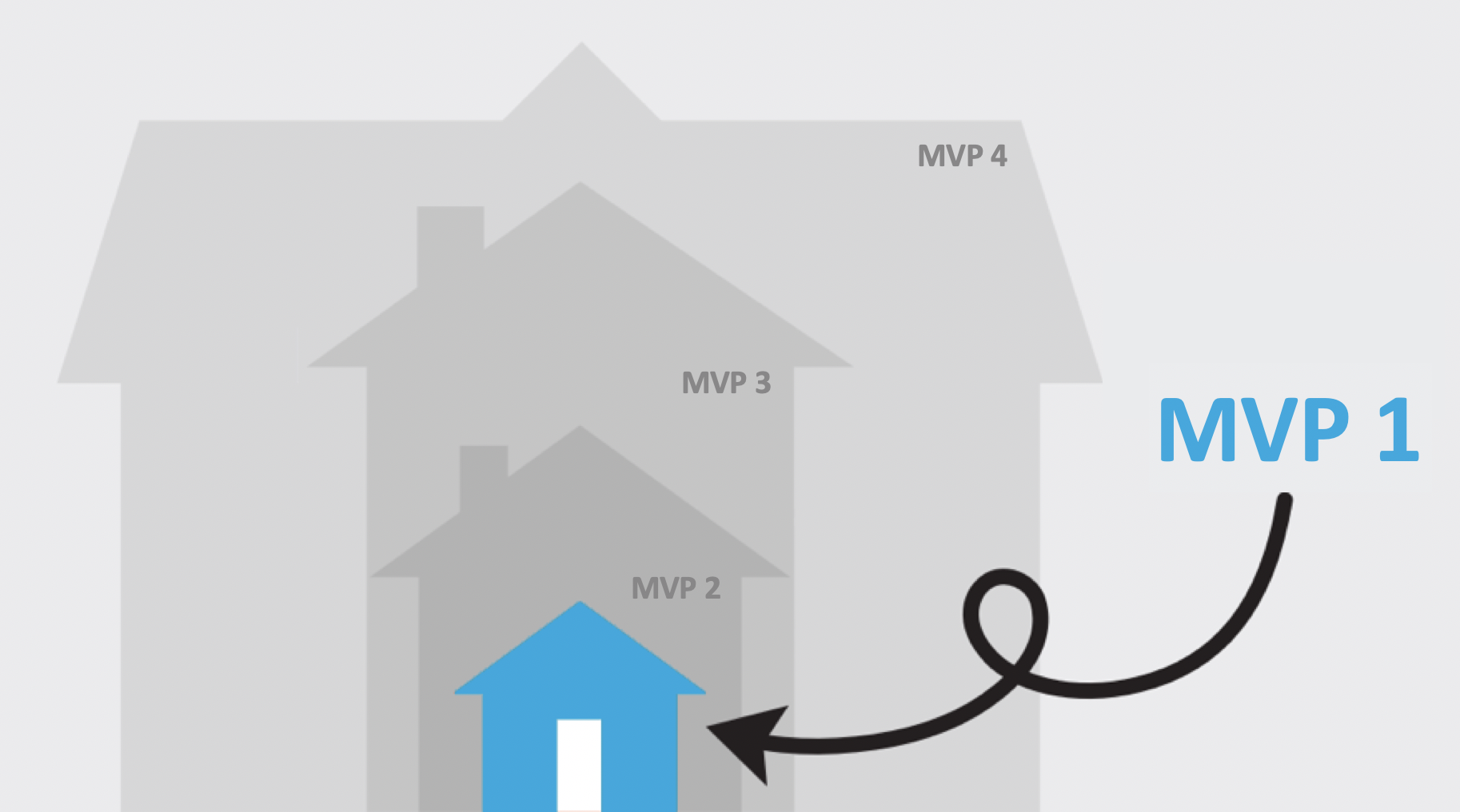

MVP was never meant to be a singular noun. In the startup world, it was useful to think about it in that manner because most startups had one big thing to prove and were routinely failing at that first hurdle. In a corporate setting, however, we're better off thinking of a series of layered MVPs - one for each iteration.

Each product will be a journey. Each stage of the journey will be an iteration and for each iteration you should be asking the following questions:

- What is my current iteration hypothesis?

- What metric shows me I've been successful?

- To stop me overinvesting, what is the minimum product I can build to viably test this hypothesis?
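
One way to keep those three questions honest is to write the answers down as a small record before any build starts. The following is only a sketch in Python: the `Iteration` class, its field names and the example values are hypothetical illustrations, not part of any established framework.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Iteration:
    """One step of the product journey, defined before anything is built."""
    hypothesis: str                 # what we are trying to prove
    success_metric: str             # how we will know it worked
    mvp_method: str                 # the least we can build to test it
    proved: Optional[bool] = None   # None until the test has been run

    def gate(self) -> str:
        """Stage-gate decision once the result is in."""
        if self.proved is None:
            return "keep testing"
        return "proceed" if self.proved else "pivot"


first = Iteration(
    hypothesis="Aggregated sales data yields useful insights",
    success_metric="Insights look sane to experienced sales managers",
    mvp_method="Excel",
)
print(first.gate())   # keep testing
first.proved = True
print(first.gate())   # proceed
```

If one of the three fields is hard to fill in, that is usually a sign the iteration is not ready to be built yet.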

A Real Life Example

Let's take a large global business in the consumer products space. They have a small army of sales agents who look after sets of bricks and mortar stores - some large, some small. The agents regularly go out into the field to try and sell more product to the stores they oversee.

Now here's the rub. Those individual agents, despite their best efforts, produce incredibly varied returns - they are acting mostly on gut feeling and some of that feeling is good, and some of it, well, bad. Given the isolated nature of what they do, they aren't basing their offered promotions, suggested SKUs or advice to retailers on any particular data beyond their own experience.

What if instead, the business could leverage all of the data, all of those gut feelings and eventual outcomes from their entire global sales force to surface the things that really work? Those insights could be shared with all to massively increase and balance the output of the sales teams.

Now let's hold fire before we pull out our cheque book to fund a multi-year blockbuster project. Rather than taking a big bang approach, let's think about how this would work in iterations. Each iteration focuses on a single hypothesis, and each gets its own MVP built only to prove that hypothesis:

Iteration 1: Is this remotely technically viable?

The number of times I hear the words "machine learning", "AI" or "Big data" on a daily basis makes me slightly cringe inside. Yes, some extraordinary things are possible using modern data science, but most businesses are not in a position (for example, due to data quality) to take advantage of it.

So in this first iteration let's see if some kind of model (machine learning or otherwise) can find useful insights in data we have available for a single region in a single country.

Iteration Hypothesis: We can generate insights using some clever modelling if we aggregate data from many sales agents

Success: Some insights that don't look completely crazy to a panel of experienced sales managers.

MVP Method: Excel (possibly a small amount of Python code if necessary)

Estimated Active (cost) time: Variable based on data quality / complexity but approx 2 - 6 weeks.
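
If the "small amount of Python" route is taken, the first pass could be as simple as the sketch below. It is illustrative only: the record layout, the agent and promotion names, the figures and the `min_agents` threshold are all made up, and a plain average stands in for whatever "clever modelling" the data actually supports.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (agent, promotion tried, % change in store sales)
records = [
    ("anna", "end-of-aisle display", 8.0),
    ("ben", "end-of-aisle display", 6.0),
    ("carla", "end-of-aisle display", 7.0),
    ("anna", "2-for-1 offer", 1.0),
    ("ben", "2-for-1 offer", -2.0),
    ("carla", "loyalty stamp", 15.0),  # one agent's result only: not enough evidence
]


def candidate_insights(rows, min_agents=2):
    """Average the outcome per promotion, keeping only those tried by several agents."""
    by_promo = defaultdict(list)
    agents = defaultdict(set)
    for agent, promo, change in rows:
        by_promo[promo].append(change)
        agents[promo].add(agent)
    ranked = [
        (promo, mean(changes))
        for promo, changes in by_promo.items()
        if len(agents[promo]) >= min_agents
    ]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


for promo, avg in candidate_insights(records):
    print(f"{promo}: {avg:+.1f}%")
```

The point of pooling is visible even in this toy data: the single spectacular "loyalty stamp" result is held back because only one agent's gut feeling supports it, while the display promotion surfaces because three agents saw it work.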

Iteration 2: Do the insights work?

So now you have some insights, but are they any good? Should we be suggesting retailers only sell our marshmallows on Tuesdays when the moon is at its zenith, or is this a simple anomaly in the data?

There is only one way to find out.

In iteration 2 we try out our list of insights in lab conditions. What do I mean by lab conditions? I mean we get a friendly (short) list of retailers in the region and ask them (perhaps even for a fee) to try out some of our insights.

Iteration Hypothesis: Our insights generate sales uplifts when executed perfectly

Success: Statistically significant uplift in sales for retailers vs a control group

MVP Method: Same Excel, some manual leg work (get out of the building!)

Estimated Active (cost) time: Variable based on negotiation / execution time / expected uplift: 1-2 months.
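
To check whether an uplift against the control group is more than noise, one option that needs nothing beyond the Python standard library is a permutation test. This is my choice for illustration, not a method the project prescribed, and the store figures, function name and parameters below are hypothetical.

```python
import random
from statistics import mean


def permutation_p_value(test, control, rounds=10_000, seed=0):
    """One-sided p-value: how often does random relabelling match or beat the observed uplift?"""
    rng = random.Random(seed)
    observed = mean(test) - mean(control)
    pooled = list(test) + list(control)
    hits = 0
    for _ in range(rounds):
        rng.shuffle(pooled)
        diff = mean(pooled[:len(test)]) - mean(pooled[len(test):])
        if diff >= observed:
            hits += 1
    return hits / rounds


# Hypothetical weekly sales change (%) per store
trial_stores = [6.1, 4.8, 7.3, 5.5, 6.9, 5.2]
control_stores = [1.0, -0.4, 2.1, 0.7, 1.5, 0.2]

p = permutation_p_value(trial_stores, control_stores)
print(f"uplift: {mean(trial_stores) - mean(control_stores):.2f} points, p = {p:.4f}")
```

With these made-up figures the two groups do not overlap at all, so the p-value comes out well below 0.05. Real retailer data will be far messier, which is exactly why the control group matters.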

Iteration 3: Our insights are usable in real life

The fact it works on paper / lab conditions offers little predictive capability when it comes to real life. Real life gets in the way of things.

In this iteration we take the list of proven insights and divvy it up amongst the full sales team to try out in the field. NB. You'll get a lot of feedback on this one, and not all of it will make you smile.

Iteration Hypothesis: Our insights can generate value in real life conditions

Success: Statistically significant uplift in sales for retailers vs a control group

MVP Method: Same Excel, with insights manually broken out by an analyst who then emails a customised set of insights to each sales agent for their specific stores.

Estimated Active (cost) time: Variable based on negotiation / execution time / expected uplift: 1-2 months.
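
The analyst's break-out step is deliberately manual at this stage. If it becomes tedious, a few lines of Python could draft the per-agent emails, as sketched below. The store-to-agent mapping, the insight texts and the `build_emails` helper are all hypothetical, and sending the emails stays a manual step.

```python
from collections import defaultdict

# Hypothetical inputs: which agent covers which store, and proven insights per store
store_agent = {"store-01": "anna", "store-02": "anna", "store-03": "ben"}
store_insights = [
    ("store-01", "Move marshmallows to the end-of-aisle display"),
    ("store-03", "Offer the multipack promotion at weekends"),
    ("store-02", "Stock the seasonal range two weeks earlier"),
]


def build_emails(insights, owners):
    """Return one plain-text email body per agent, listing insights by store."""
    per_agent = defaultdict(list)
    for store, text in insights:
        per_agent[owners[store]].append(f"- {store}: {text}")
    return {
        agent: f"Hi {agent.title()},\n\nInsights to try this month:\n" + "\n".join(lines)
        for agent, lines in per_agent.items()
    }


for body in build_emails(store_insights, store_agent).values():
    print(body, end="\n\n")
```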

Iteration 4: Early scaling

Now we're really starting to cook with gas. Our insights work. How scalable is what we've done? For most businesses, data availability and quality differ enormously across regions. If it only works for one that might still be great, but we'd probably just keep doing things manually.

Iteration Hypothesis: Our methodology can be extended to other regions

Success: Insights generated for other regions also don't look crazy to our panel of expert sales managers

MVP Method: Same Excel with a new, manual pipeline for cleaning and importing data from other regions.

Estimated Active (cost) time: Variable based on data quality / availability - 2 weeks - 6 weeks
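
Much of the effort in this iteration tends to be mundane: getting each region's export into the same shape before it goes into the spreadsheet. A minimal sketch of that cleaning step follows, assuming two regions whose column headers and number formats differ. The region codes, headers and formats are invented for illustration.

```python
# Hypothetical column names: each region exports the same facts under different headers
COLUMN_MAP = {
    "de": {"Filiale": "store", "Aktion": "promotion", "Umsatz": "sales"},
    "uk": {"Store": "store", "Promo": "promotion", "Sales": "sales"},
}


def clean_row(region, raw):
    """Rename columns to the shared schema and parse sales as a float."""
    row = {COLUMN_MAP[region][key]: value.strip() for key, value in raw.items()}
    sales = row["sales"]
    if region == "de":
        # Assumed German number format: 1.234,50 -> 1234.50
        sales = sales.replace(".", "").replace(",", ".")
    else:
        # Assumed English number format: 1,234.50 -> 1234.50
        sales = sales.replace(",", "")
    row["sales"] = float(sales)
    row["region"] = region
    return row


print(clean_row("de", {"Filiale": " B-17 ", "Aktion": "Display", "Umsatz": "1.234,50"}))
print(clean_row("uk", {"Store": "L-02", "Promo": "Display", "Sales": "1,234.50"}))
```

How many special cases a function like this accumulates per region is itself a useful early signal of how expensive wider scaling will be.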

Iteration 5: Tool scaling

Finally, we're going to invest in building something properly! In iteration 3, I hypothesise, you discovered that sales agents didn't love having to use an emailed list of insights - they use salesforce.com and moving between the systems was a major headache.

Likewise, your back office staff have started loudly complaining that the manual process used to produce the insights for each sales agent is horribly time-consuming and at risk of error. This is fantastic: the market (your users) is now pulling a solution from you. It's time to build.

Iteration Hypothesis: We can increase our sales force and back office efficiency by building our model into a tool

Success: Lower complaint rate / faster insight preparation time

MVP Method: A custom back end system which automatically cleans, processes and ingests data into a Python model. The data is then pumped using API calls to a salesforce.com front end for use and tracking by the sales team.

Estimated Active (cost) time: 3 - 12 months

What happens in reality?

In reality, you are very unlikely to get all the way through a list of iterations like this without hitting a serious unknown. For the example above, the client found that whilst insights did indeed cause uplift in lab conditions, rolling them out to account managers and to other countries would be a serious undertaking due to union restrictions and vastly different data quality between countries.

By building a series of iterations and associated MVPs, the client was able to short circuit what would have been a vastly expensive multi-year program, and redirect funds to data quality improvement projects that would form the basis for later rollouts.

Conclusion

MVPs support focus when running in test & learn iterations - allowing your project teams to get up and running faster and to fail faster. This is a good thing - although seeing multiple projects fail quickly might feel like a bittersweet success, the reality is that it's significantly better than these projects failing years down the line after having sucked up many multiples of the original investment.

So let's throw away the lazy understanding of MVPs. Let's recognize that a team should never be planning to build just a single one. Instead, this is a layered approach, which gives each iteration a clear stage gate beyond which management can decide whether to proceed or to pivot. It is a tool which can create stepping stones of validated learning with relatively limited investment.

To get there, managers need to be willing to let product teams build product that may appear too simple in the early iterations (manager-driven scope creep is one of the biggest hurdles to proper MVPs). In return, they should hold those teams to reporting on whether the hypothesis was proved or not before letting them move on to the next stage.

With each MVP layer you will increase fidelity and scope, and move the metric needle a small amount, until YES, you reach a shift that you can describe as scale. Meanwhile your non-MVP competitor is still trying to brush their big bang, over-inflated and ultimately failing project under the carpet.